Using FOIA Data and Unix to Halve a Major Source of Parking Tickets

Intro

This is my first blog post on the internet, ever. Hopefully it's interesting and accurate. Please point out any mistakes you see!

In 2016, I tried to find parking ticket hotspots in Chicago to see whether a bit of data munging could help reduce the number of tickets issued there. In the end, I only really got one spot cleaned up, but it was one of the most-ticketed spots in all of Chicago, and the fix led to about a 50% reduction in parking tickets there.

Here's a bit of that story.

Getting the data through FOIA

The system Chicago uses to store its parking tickets is called CANVAS. It's short for "City of Chicago Violation, Noticing and Adjudication Business Process And System Support" [sic] and is managed by IBM. Its most recent contract started in 2012, expires in 2022, and has a price tag of over $190 million.

Most of Chicago's contracts and their Requests For Procurement (RFP) PDFs are published online. The CANVAS contract documents give a fair amount of detail about its backend infrastructure, including the fact that it uses Oracle 10g. In other words, since the data sits in an Oracle database, IBM can fulfill a FOIA request by running some simple SQL.

CANVAS Technical Spec

CANVAS Contract

CANVAS Request For Procurement (RFP) and Contract

With that information in hand, and a couple of failed FOIA requests later, I sent this request to get the parking ticket data from Jan 1, 2009 to Mar 10, 2016:

"Please provide to me all possible information on all parking tickets between 2009 and the present day. This should include any information related to the car (make, etc), license plate, ticket, ticketer, ticket reason(s), financial information (paid, etc), court information (contested, etc), situational (eg, time, location), and photos/videos. Ideally, this should also include any relevant ticket-related information stored within CANVAS.

[...]

This information will be used for data analysis for research.

Thanks in advance,

Matt Chapman"

Data

About a month later, a guy named Carl (in a fancy suit) handed me a CD with some extremely messy data in a semicolon-delimited file named A50462_TcktsIssdSince2009.txt. The file had info on 17,806,818 parking tickets spanning Jan 1, 2009 to Mar 10, 2016.

The data itself looks like this:

```
$ head -5 A50462_TcktsIssdSince2009.txt
Ticket Number;License Plate Number;License Plate State;License Plate Type;Ticket Make;Issue Date;Violation Location;Violation Code;Violation Description;Badge;Unit;Ticket Queue;Hearing Dispo
39596087;zzzzzz;IL;PAS;VOLV;03/03/2003 11:25 am;3849 W CONGRESS;0976160F;EXPIRED PLATES OR TEMPORARY REGISTRATION;11870;701;Paid;
40228076;zzzzzz;IL;TRK;FORD;03/01/2003 12:29 am;3448 N OKETO;0964170A;TRUCK,RV,BUS, OR TAXI RESIDENTIAL STREET;17488;016;Define;
40480875;zzzzzz;IL;PAS;PONT;03/01/2003 09:45 pm;8135 S PERRY;0964130;PARK OR BLOCK ALLEY;17575;006;Notice;
40718783;zzzzzz;IL;PAS;ISU;03/02/2003 06:02 pm;6928 S CORNELL;0976160F;EXPIRED PLATES OR TEMPORARY REGISTRATION;7296;003;Paid;Liable
```

Some things to note about the data:

- The file is semicolon delimited.

- Each address is typed by hand on a handheld device, often by someone wearing gloves.

- There are millions of typos in the address column, including over 50,000 stray semicolons!

- There is no lat/lon.
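Because a stray semicolon in the address shifts every later field, a naive split on ";" misaligns those rows. Here's a minimal Python sketch of one possible repair (not necessarily how I handled it), assuming semicolons only ever sneak into the Violation Location field, so the six columns before it and the six after it can be trusted. The column names come from the header above; the second sample row is made up to show a stray semicolon.

```python
import csv

# Column names copied from the header line of A50462_TcktsIssdSince2009.txt.
COLUMNS = [
    "Ticket Number", "License Plate Number", "License Plate State",
    "License Plate Type", "Ticket Make", "Issue Date", "Violation Location",
    "Violation Code", "Violation Description", "Badge", "Unit",
    "Ticket Queue", "Hearing Dispo",
]
LOC = COLUMNS.index("Violation Location")  # 6 trusted columns before the address
TAIL = len(COLUMNS) - LOC - 1              # 6 trusted columns after it


def parse_row(fields):
    """Map one split line onto COLUMNS, folding stray semicolons back into the address.

    Assumption: extra semicolons only ever appear inside Violation Location.
    Returns None for rows with too few fields instead of guessing.
    """
    if len(fields) < len(COLUMNS):
        return None
    cut = len(fields) - TAIL
    address = ";".join(fields[LOC:cut])
    return dict(zip(COLUMNS, fields[:LOC] + [address] + fields[cut:]))


def read_tickets(lines):
    """Yield one dict per ticket from an iterable of raw text lines."""
    # QUOTE_NONE: treat quote characters as ordinary text, split strictly on ';'.
    reader = csv.reader(lines, delimiter=";", quoting=csv.QUOTE_NONE)
    next(reader, None)  # skip the header row
    for fields in reader:
        row = parse_row(fields)
        if row is not None:
            yield row


if __name__ == "__main__":
    sample = [
        ";".join(COLUMNS),
        "40480875;zzzzzz;IL;PAS;PONT;03/01/2003 09:45 pm;8135 S PERRY;0964130;"
        "PARK OR BLOCK ALLEY;17575;006;Notice;",
        # Made-up row: a semicolon typed into the middle of the address.
        "12345678;zzzzzz;IL;PAS;FORD;03/02/2003 10:00 am;1166 N ST;ATE;0976160F;"
        "EXPIRED PLATES OR TEMPORARY REGISTRATION;1234;001;Paid;",
    ]
    for ticket in read_tickets(sample):
        print(ticket["Ticket Number"], repr(ticket["Violation Location"]))
    # 40480875 '8135 S PERRY'
    # 12345678 '1166 N ST;ATE'
```

On the real file you'd pass an open file handle, e.g. open("A50462_TcktsIssdSince2009.txt", newline=""); the file's text encoding isn't stated here, so that part may need adjusting.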

What that amounts to is an extremely, extremely messy and unpredictable dataset that's incredibly difficult to map accurately to lat/lon, which is needed for any sort of comprehensive GIS analysis. There are a bunch of geocoding services that can help out here, but most of them have about a 50% accuracy rate on addresses like these. That said, with a bit of scrubbing, that number can be boosted closer to 90%. That's a post for another time.

Here’s a sample list of Lake Shore Drive typos:

- Laks Shore Dr

- Lawkeshore Dr West

- Lkaeshore Dr

- Lkae Shore Dr

- Lkae Shore Drive

- Lkaeshore Dr West
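One common way to scrub typos like these is fuzzy matching against a list of known street names. The sketch below is a swapped-in technique using Python's standard difflib, not necessarily the scrubbing I actually did. The CANONICAL list is hypothetical, and dropping a trailing direction word (like "West") and comparing names with spaces removed are simplifying assumptions.

```python
import difflib

# Hypothetical reference list; a real one would come from an official street list.
CANONICAL = ["LAKE SHORE DR", "LAKE PARK AVE", "LAKE ST", "STATE ST", "WABASH AVE"]
SUFFIXES = {"DRIVE": "DR", "AVENUE": "AVE", "STREET": "ST"}
DIRECTIONS = {"NORTH", "SOUTH", "EAST", "WEST"}


def normalize(raw):
    """Uppercase, abbreviate suffixes, and drop a trailing direction word."""
    words = raw.upper().split()
    if words and words[-1] in DIRECTIONS:
        words = words[:-1]  # simplification: ignore 'West' etc. when matching
    return " ".join(SUFFIXES.get(w, w) for w in words)


def match_street(raw, cutoff=0.8):
    """Return the closest canonical street name, or None if nothing is close enough."""
    # Compare with spaces removed so 'LKAESHORE' can still line up with 'LAKE SHORE'.
    squashed = {name.replace(" ", ""): name for name in CANONICAL}
    target = normalize(raw).replace(" ", "")
    hits = difflib.get_close_matches(target, squashed, n=1, cutoff=cutoff)
    return squashed[hits[0]] if hits else None


if __name__ == "__main__":
    for typo in ["Laks Shore Dr", "Lawkeshore Dr West", "Lkaeshore Dr",
                 "Lkae Shore Dr", "Lkae Shore Drive", "Lkaeshore Dr West"]:
        print(f"{typo!r} -> {match_street(typo)}")
```

The cutoff is a trade-off: too low and genuinely different streets get merged, too high and badly mangled names fall through as unmatched.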

Original Analysis

I was particularly interested in finding hotspots that stood out. I spent a lot of my time just throwing hacky code at the problem, and I eventually wrote two (hacky) command pipelines that identified a potentially fixable spot.

Originally, I did the analysis with a combination of Unix commands and gnuplot. Since then, I've migrated my code to Python + matplotlib + SQL, but for this post I wanted to show the original analysis.

Count tickets per hundred block, rounding each house number down by replacing its last two digits with 00 and stripping common street suffixes (top of the output shown):

```
$ mawk -F';' '{print $7}' all_tickets.orig.txt |
    sed -r 's/^([0-9]*)[0-9][0-9] (.*)/\100 \2/' |
    sed -r 's/ (BLVD|ST|AV|AVE|RD)$//' |
    sort | uniq -c | sort -nr
79320 1900 W OGDEN
60059 1100 N STATE
50594 100 N WABASH
44503 1400 N MILWAUKEE
43121 1500 N MILWAUKEE
43030 2800 N BROADWAY
42294 2100 S ARCHER
42116 1900 W HARRISON
```
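For comparison, here's a rough Python equivalent of the pipeline above (a sketch, not the code I actually migrated to). One gotcha when porting the first sed expression: in Python's re.sub, \100 is read as an octal escape (the character @) rather than group 1 followed by "00", so the group reference has to be written \g<1>00.

```python
import re
from collections import Counter

HOUSE = re.compile(r"^([0-9]*)[0-9][0-9] (.*)")
SUFFIX = re.compile(r" (BLVD|ST|AV|AVE|RD)$")


def hundred_block(address):
    """Mirror the two sed steps: zero the last two house-number digits, drop a suffix."""
    address = HOUSE.sub(r"\g<1>00 \2", address)  # '\100' would mean chr(0o100) here
    return SUFFIX.sub("", address)


def top_blocks(addresses, n=10):
    """Equivalent of `sort | uniq -c | sort -nr | head`: most common blocks first."""
    return Counter(hundred_block(a) for a in addresses).most_common(n)
```

For example, top_blocks(["1166 N STATE ST", "1150 N STATE", "1900 W OGDEN AVE"]) returns [("1100 N STATE", 2), ("1900 W OGDEN", 1)].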

Count tickets by violation type at each exact address (top of the output shown):

```
$ mawk -F';' '{print $9,$7}' A50462_TcktsIssdSince2009.txt |
    sed -r 's/ (BLVD|ST|AV|AVE|RD)$//' |
    sort --parallel=4 | uniq -c | sort -nr
12510 EXPIRED PLATES OR TEMPORARY REGISTRATION 5050 W 55TH
9636 PARKING/STANDING PROHIBITED ANYTIME 835 N MICHIGAN
8943 EXPIRED PLATES OR TEMPORARY REGISTRATION 1 W PARKING LOT A
6168 EXPIRED PLATES OR TEMPORARY REGISTRATION 1 W PARKING LOT E
5938 PARKING/STANDING PROHIBITED ANYTIME 500 W MADISON
5663 PARK OR STAND IN BUS/TAXI/CARRIAGE STAND 1166 N STATE
5527 EXPIRED METER OR OVERSTAY 5230 S LAKE PARK
4174 PARKING/STANDING PROHIBITED ANYTIME 1901 W HARRISON
4137 REAR AND FRONT PLATE REQUIRED 1 W PARKING LOT A
```
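A natural follow-up is to zoom in on a single hundred block and see which violations dominate it. Here's a minimal Python sketch that works from the same two columns the command prints; the address regex assumes addresses look like "<number> <N|S|E|W> <street>" and simply skips anything messier, which on this dataset will be a lot of rows.

```python
import re
from collections import Counter

# '<number> <direction> <street>' with an optional trailing suffix, e.g. '1166 N STATE ST'.
ADDRESS = re.compile(r"^(\d+) ([NSEW]) (.+?)(?: (?:BLVD|ST|AV|AVE|RD))?$")


def violations_on_block(tickets, direction, street, block_start):
    """Rank (violation, address) pairs within one hundred block, e.g. 1100-1199 N STATE.

    `tickets` yields (violation_description, address) pairs, the same two
    columns the mawk command prints ($9 and $7).
    """
    counts = Counter()
    for violation, address in tickets:
        m = ADDRESS.match(address.strip().upper())
        if m is None:
            continue  # messy address; skipped rather than guessed at
        number, d, name = int(m.group(1)), m.group(2), m.group(3)
        if d == direction and name == street and block_start <= number < block_start + 100:
            counts[(violation, f"{number} {d} {name}")] += 1
    return counts.most_common()
```

Calling violations_on_block(pairs, "N", "STATE", 1100) would list what gets ticketed on the 1100 block of N State, with "1166 N STATE" and "1166 N STATE ST" counted together.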

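Both pipelines roughly show that something is going on in the 1100 block of N State St (second only to 1900 W Ogden in the first list), and 1166 N State St looks particularly suspicious, with 5,663 tickets for parking or standing in a bus/taxi/carriage stand.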

So, have a look at the original set of signs:

Several things make this spot confusing:

- This is a taxi stand from 7pm to 5am for three cars’ lengths. Parking in a taxi stand is a $100 ticket.

- When this spot isn’t a taxi stand, it’s metered parking – for a parking meter beyond an alleyway.

- It’s possible to pay for parking here after 7pm, which makes it look like parking is acceptable – especially with the “ParkChicago” sign floating there.

- Confusion creates more confusion – if one car parks there, then more cars follow. Cha-ching.
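The taxi stand window wraps past midnight, which is easy to get wrong when classifying tickets by time of day: a ticket falls in the window if it was issued after 7pm or before 5am, not "between" them. A small Python sketch, assuming the Issue Date format seen in the sample rows (e.g. "03/01/2003 09:45 pm") and treating 5:00 am itself as outside the window:

```python
from datetime import datetime, time

# Taxi stand hours from the sign: 7pm to 5am, crossing midnight.
STAND_START = time(19, 0)
STAND_END = time(5, 0)  # assumption: the end boundary is exclusive


def parse_issue_date(s):
    """Parse an Issue Date string like '03/01/2003 09:45 pm'."""
    return datetime.strptime(s, "%m/%d/%Y %I:%M %p")


def during_taxi_stand_hours(issued):
    """True if the ticket's time of day falls inside the midnight-wrapping window."""
    t = issued.time()
    # For a window that wraps midnight, 'inside' means after the start OR before the end.
    return t >= STAND_START or t < STAND_END
```

Splitting a spot's tickets this way separates taxi stand violations from daytime meter tickets at the same address.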

Contacting the 2nd Ward

With all that in mind, I contacted the 2nd Ward alderman’s office on April 12 to explain this, and got back this response:

"Hello Matt,

[…]The signs and the ordinance are currently being investigated.

In the interim, I highly recommend that you do not park there to avoid any further tickets.

Lisa Ryan

Alderman Brian Hopkins - 2nd Ward"

The Fix

On 1/11/17 I received this email from Lisa:

"Matt,

I don't know if you noticed the additional signage installed on State Street at the 3 Taxi Stand.

This should elevate [sic] any further confusion of vehicle parking.

Thank you for your patience.

Lisa Ryan"

Sure enough, two new signs were added!

The new taxi stand sign marks the end of a previously unbounded taxi stand, and the No Parking sign makes it explicit that parking here during taxi stand hours is a fineable offense. Neat!

And then there's this guy:

Results

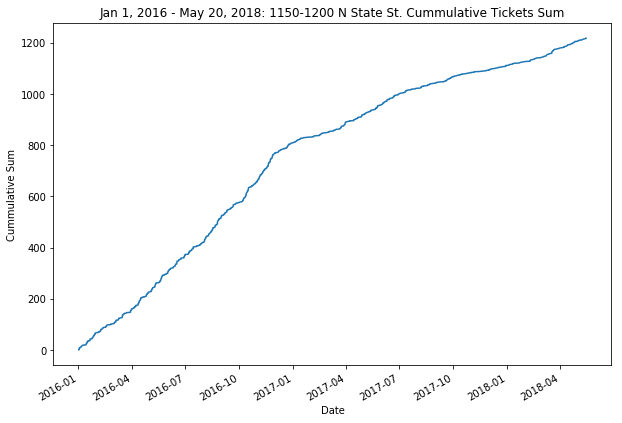

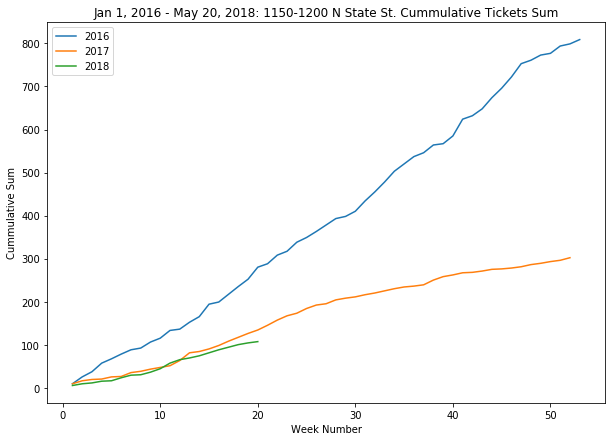

I recently revisited the number of tickets at that spot. Armed with a new set of data from another FOIA request, I did some analysis with Python, pandas, and SQL. I found that the new signs led to about a 50% reduction in parking tickets between 1150 and 1200 N State St. Adding it all up, that's about 400 fewer tickets in 2017 and 200 fewer so far in 2018, compared to 2016. At $100 per taxi stand ticket, those roughly 600 tickets come to about $60,000!
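The year-over-year comparison and the dollar figure boil down to a few lines. This Python sketch is illustrative only (the real analysis used pandas and SQL). It assumes the newer dataset's dates use the same MM/DD/YYYY format as the original file, that "between 1150 and 1200" means 1150 up to but not including 1200, and that every ticket counted is a $100 ticket.

```python
import re
from collections import Counter

NUMBER = re.compile(r"^(\d+) N STATE\b")


def yearly_counts(tickets, low=1150, high=1200):
    """Count tickets per year at addresses from `low` up to (not including) `high` N State.

    `tickets` yields (issue_date, address) pairs with dates like '01/11/2017 08:15 pm'.
    """
    counts = Counter()
    for issue_date, address in tickets:
        m = NUMBER.match(address.upper())
        if m and low <= int(m.group(1)) < high:
            counts[int(issue_date[6:10])] += 1  # YYYY sits at characters 6-9
    return counts


def change_vs(counts, base_year, year):
    """Fractional change relative to base_year (e.g. -0.5 for a 50% drop)."""
    return (counts[year] - counts[base_year]) / counts[base_year]


def fines_avoided(counts, base_year, years, fine=100):
    """Dollar value of the drop vs base_year, assuming every ticket costs `fine`."""
    return sum(counts[base_year] - counts[y] for y in years) * fine
```

With roughly 400 fewer tickets in 2017 and 200 fewer in 2018, fines_avoided works out to (400 + 200) × $100 = $60,000.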

The slope drops by about 50%, right around the date of Lisa’s email:

And then comparing 2016 to 2017:
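The slope of a cumulative ticket count over a period is just the average number of tickets per day, so the halving can be checked numerically by comparing daily rates before and after the change. A minimal Python sketch, assuming you already have the block's ticket dates as datetime.date objects; using the date of Lisa's email (1/11/17) as the change point is a proxy, since the exact installation date isn't known.

```python
from datetime import date


def daily_rates(ticket_dates, change: date, start: date, end: date):
    """Average tickets per day in [start, change) and in [change, end).

    On a cumulative-count plot, these are the slopes before and after `change`.
    """
    dates = list(ticket_dates)
    before = sum(1 for d in dates if start <= d < change)
    after = sum(1 for d in dates if change <= d < end)
    return before / (change - start).days, after / (end - change).days


def slope_ratio(ticket_dates, change: date, start: date, end: date):
    """After-rate divided by before-rate; about 0.5 means the slope halved."""
    before, after = daily_rates(ticket_dates, change, start, end)
    return after / before
```

For example, slope_ratio(block_dates, date(2017, 1, 11), date(2016, 1, 1), date(2018, 1, 1)), where block_dates is a hypothetical list of ticket dates for 1150 to 1200 N State.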

What's next?

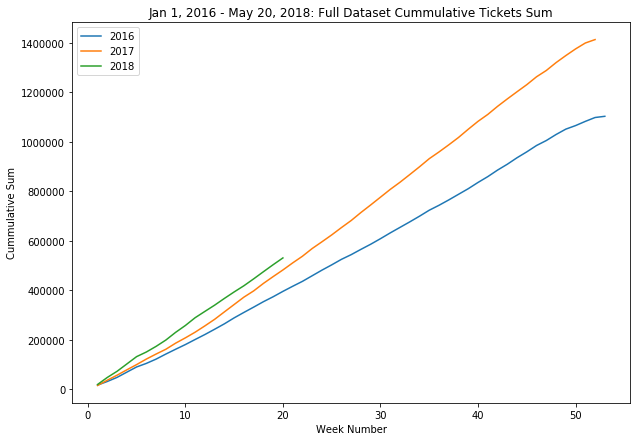

Overall, the number of parking tickets issued in Chicago is going up, but this work shows that something can be done about it, even if only on a small scale.

This work covers only one small section of road, but I'm convinced that similar work can be done on a systematic scale. It's mostly just a matter of digging through data and working directly with each ward.

The later analysis here was also done only on the most recent dataset that the Department of Revenue gave me. The two datasets have different sets of columns, so they still need to be combined. I hope to accomplish that soon!
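One way that combining step could look, sketched in Python: rename the newer dataset's columns onto the older names where they mean the same thing, take the union of all columns (filling gaps with None), and de-duplicate by ticket number. The rename mapping is a placeholder because the new dataset's column names aren't listed here, and the sketch assumes both datasets carry a "Ticket Number" that identifies the same ticket in each.

```python
def combine(old_rows, new_rows, rename=None, key="Ticket Number"):
    """Union two lists of dict rows that have different columns.

    rename: hypothetical {new_column_name: old_column_name} mapping.
    Missing columns are filled with None; if a ticket appears in both
    datasets, the row from new_rows wins.
    """
    rename = rename or {}
    new_rows = [{rename.get(c, c): v for c, v in row.items()} for row in new_rows]
    old_rows = list(old_rows)
    # dict.fromkeys keeps first-seen column order while removing duplicates.
    columns = list(dict.fromkeys(c for row in old_rows + new_rows for c in row))
    merged = {}
    for row in old_rows + new_rows:
        merged[row[key]] = {c: row.get(c) for c in columns}
    return columns, list(merged.values())
```

Whether newer rows should overwrite older ones (for example, a ticket whose payment status changed) is a judgment call that depends on what each dataset actually contains.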

Tags: FOIA, parkingtickets, civics, unix, python